Rewriting the heart of our sync engine

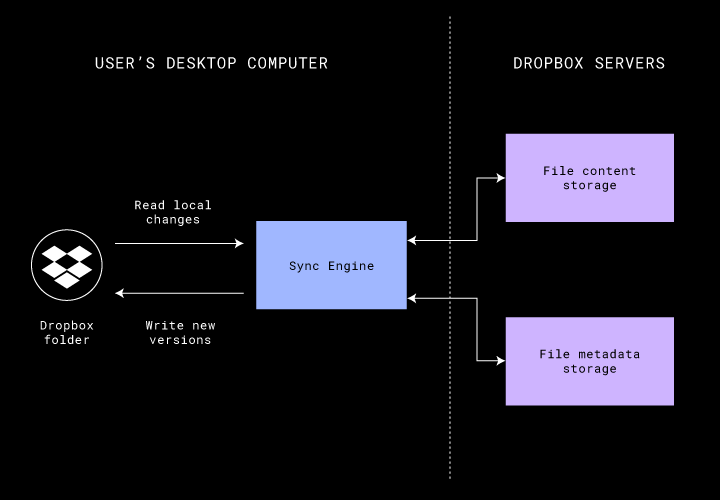

Over the past four years, we've been working hard on rebuilding our desktop client's sync engine from scratch. The sync engine is the magic behind the Dropbox folder on your desktop computer, and it's one of the oldest and most important pieces of code at Dropbox. We're proud to announce today that we've shipped this new sync engine (codenamed "Nucleus") to all Dropbox users.

Rewriting the sync engine was really difficult, and we don't want to blindly celebrate it, because in many environments it would have been a terrible idea. It turned out that this was an excellent idea for Dropbox, but only because we were very thoughtful about how we went about this process. In particular, we're going to share reflections on how to think about a major software rewrite and highlight the key initiatives that made this project a success, like having a very clean data model.

To begin, let's rewind the clock to 2008, the year Dropbox sync first entered beta. At first glance, a lot of Dropbox sync looks the same as today. The user installs the Dropbox app, which creates a magic folder on their computer, and putting files in that folder syncs them to Dropbox servers and their other devices. Dropbox servers store files durably and securely, and these files are accessible anywhere with an Internet connection.

Since then we've taken file sync pretty far. We started with consumers syncing what fit on their devices for their own personal use. Now, our users bring Dropbox to their jobs, where they have access to millions of files organized in company sharing hierarchies. The content of these files often far exceeds their computer's local disk space, and they can now use Dropbox Smart Sync to download files only when they need them. Dropbox has hundreds of billions of files, trillions of file revisions, and exabytes of customer data. Users access their files across hundreds of millions of devices, all networked in an enormous distributed system.

Sync at scale is hard

Syncing files becomes much harder at scale, and understanding why is important for understanding why we decided to rewrite. Our first sync engine, which we call "Sync Engine Classic," had fundamental issues with its data model that only showed up at scale, and these issues made incremental improvements impossible.

Distributed systems are hard

The scale of Dropbox alone is a hard systems engineering challenge. But putting raw scale aside, file synchronization is a unique distributed systems problem, because clients are allowed to go offline for long periods of time and reconcile their changes when they return. Network partitions are anomalous conditions for many distributed systems algorithms, yet they are standard operation for us.

Getting this right is important: users trust Dropbox with their most precious content, and keeping it safe is non-negotiable. Bidirectional sync has many corner cases, and durability is harder than just making sure we don't delete or corrupt data on the server. For example, Sync Engine Classic represents moves as pairs of deletes at the old location and adds at the new location. Consider the case where, due to a transient network hiccup, a delete goes through but its corresponding add does not. Then the user would see the file missing on the server and their other devices, even though they only moved it locally.
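To make that failure mode concrete, here is a minimal Rust sketch. It assumes a toy server that is just a map from path to content and a hypothetical `Op` enum; neither reflects Dropbox's actual protocol. A move sent as a delete plus an add loses the file if only the first request is delivered, while a single `Move` operation is applied all at once or not at all.

```rust
use std::collections::BTreeMap;

/// Hypothetical operations a client could send to a toy server.
#[derive(Debug, Clone)]
pub enum Op {
    Delete { path: String },
    Add { path: String, content: String },
    /// One request that relocates the file; it is applied entirely or not at all.
    Move { from: String, to: String },
}

/// Toy server state: path -> file content.
pub type Server = BTreeMap<String, String>;

/// Applies a single operation to the toy server.
pub fn apply(server: &mut Server, op: &Op) {
    match op {
        Op::Delete { path } => {
            server.remove(path);
        }
        Op::Add { path, content } => {
            server.insert(path.clone(), content.clone());
        }
        Op::Move { from, to } => {
            // Remove and re-insert inside one operation, so no separately
            // delivered request can observe the file at neither path.
            if let Some(content) = server.remove(from) {
                server.insert(to.clone(), content);
            }
        }
    }
}

/// Simulates a flaky network: only the first `delivered` operations arrive.
pub fn sync(server: &mut Server, ops: &[Op], delivered: usize) {
    for op in ops.iter().take(delivered) {
        apply(server, op);
    }
}
```

If only the `Delete` of the delete-plus-add pair arrives, the server ends up with no copy of the file at all; with `Move`, a dropped request leaves the file at its old path and a delivered one leaves it at its new path.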

Durability everywhere is hard

Dropbox also aims to "just work" on users' computers, no matter their configuration. We support Windows, macOS, and Linux, and each of these platforms has a variety of filesystems, all with slightly different behavior. Under the operating system, there's enormous variation in hardware, and users also install different kernel extensions or drivers that change the behavior within the operating system. And above Dropbox, applications all use the filesystem in different ways and rely on behavior that may not actually be part of its specification.

Guaranteeing durability in a particular environment requires understanding its implementation, mitigating its bugs, and sometimes even reverse-engineering it when debugging production issues. These issues often only show up in large populations, since a rare filesystem bug may only affect a very small fraction of users. So at scale, "just working" across many environments and providing strong durability guarantees are fundamentally opposed.
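As an illustration of how much even a single local write depends on the environment, here is a Rust sketch of a widely used POSIX idiom (not Dropbox's code): write a temporary file in the same directory, flush it with `File::sync_all`, `rename` it over the destination, then sync the directory entry. On a filesystem that honors `fsync` and atomic same-directory rename, a crash leaves either the old contents or the new ones. The temporary-file naming scheme is an arbitrary choice for this sketch, and a filesystem or driver that deviates from the specification can break the guarantee.

```rust
use std::fs::{self, File};
use std::io::{self, Write};
use std::path::Path;

/// Replaces `dest` with `contents` so that a reader sees either the old file or
/// the new one, assuming the filesystem implements fsync and rename as specified.
pub fn write_atomically(dest: &Path, contents: &[u8]) -> io::Result<()> {
    // A bare file name has an empty parent; treat that as the current directory.
    let dir = match dest.parent() {
        Some(p) if !p.as_os_str().is_empty() => p,
        _ => Path::new("."),
    };
    let file_name = dest
        .file_name()
        .ok_or_else(|| io::Error::new(io::ErrorKind::InvalidInput, "path has no file name"))?;
    // Keep the temp file in the same directory so the rename stays on one filesystem.
    let tmp = dir.join(format!(".{}.tmp", file_name.to_string_lossy()));

    let mut f = File::create(&tmp)?;
    f.write_all(contents)?;
    f.sync_all()?; // flush file data and metadata to stable storage
    drop(f);

    fs::rename(&tmp, dest)?; // atomic replacement on POSIX filesystems

    // Persist the directory entry as well. Opening a directory this way works on
    // Unix-like systems; Windows would need a different approach, so skip it there.
    if cfg!(unix) {
        File::open(dir)?.sync_all()?;
    }
    Ok(())
}
```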

Testing file sync is hard

With a sufficiently large user base, just about anything that's theoretically possible will happen in production. Debugging issues in production is much more expensive than finding them in development, especially for software that runs on users' devices. So catching regressions with automated testing before they hit production is critical at scale.

Yet testing sync engines well is difficult, since the number of possible combinations of file states and user actions is astronomical. A shared folder may have thousands of members, each with a sync engine with varied connectivity and a differently out-of-date view of the Dropbox filesystem. Every user may have different local changes that are pending upload, and they may have different partial progress for downloading files from the server. Therefore, there are many possible "snapshots" of the system, all of which we must test.

The number of valid actions to take from a system state is also tremendously large. Syncing files is a heavily concurrent process, where the user may be uploading and downloading many files at the same time. Syncing an individual file may involve transferring content chunks in parallel, writing contents to disk, or reading from the local filesystem. Comprehensive testing requires trying different sequences of these actions to ensure our system is free of concurrency bugs.
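To get a feel for how fast this grows, take a deliberately simplified model (an assumption for illustration, not Dropbox's own analysis): n independent syncs, each made of k steps that must run in order. The number of distinct interleavings is the multinomial coefficient below, and it grows faster than exponentially in n.

$$
\#\,\text{interleavings} = \frac{(nk)!}{(k!)^{n}}, \qquad
n = 2,\ k = 3:\ \frac{6!}{(3!)^{2}} = 20, \qquad
n = 3,\ k = 3:\ \frac{9!}{(3!)^{3}} = 1680
$$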

Specifying sync behavior is hard

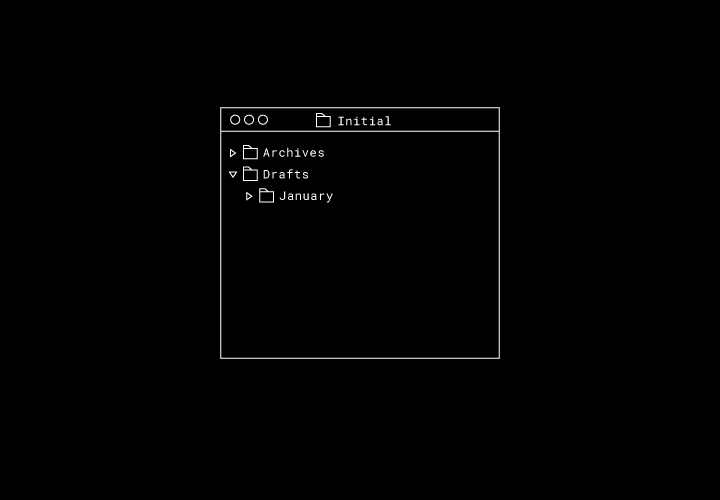

Finally, as if the large state space weren't bad enough, it's often hard to precisely define correct behavior for a sync engine. For example, consider the case where we have three folders with one nested inside another.

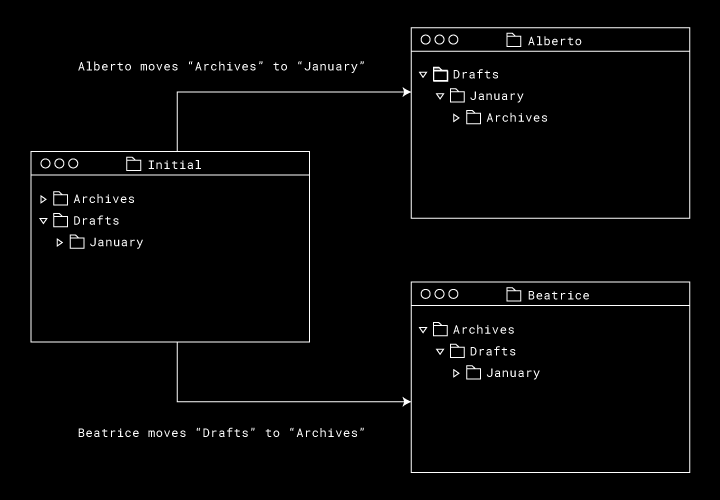

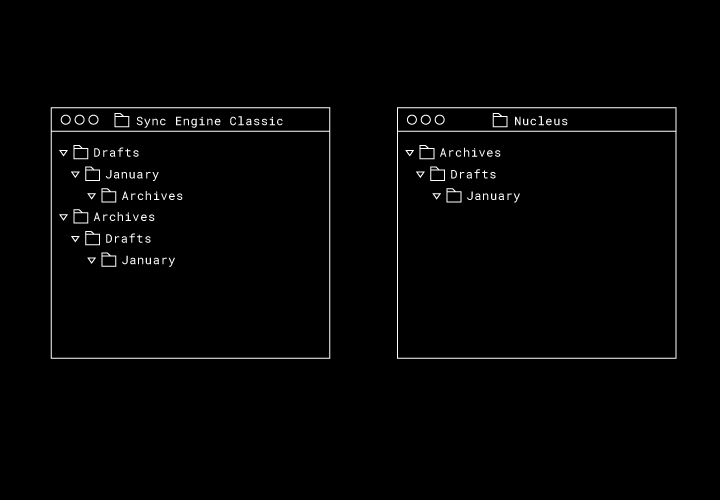

Then, let's say we have two users, Alberto and Beatrice, who are working within this folder offline. Alberto moves "Archives" into "January," and Beatrice moves "Drafts" into "Archives."

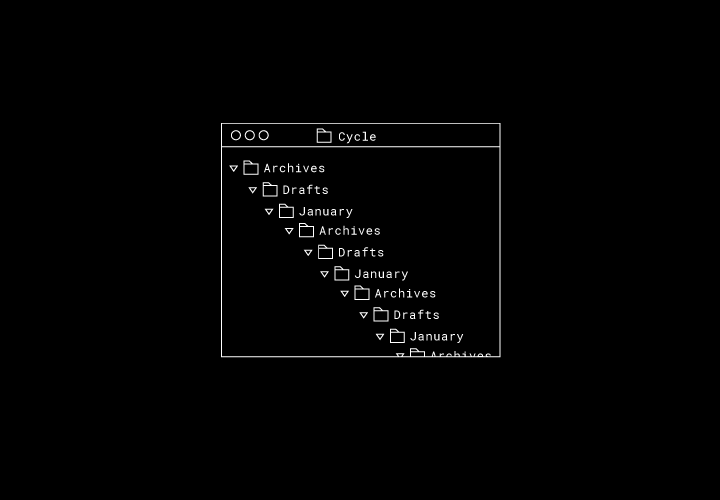

What should happen when they both come back online? If we apply these moves directly, we'll have a cycle in our filesystem graph: "Archives" is the parent of "Drafts," "Drafts" is the parent of "January," and "January" is the parent of "Archives."
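One way to keep the filesystem a tree is to refuse any move that would place a folder inside its own subtree. The Rust sketch below is not Nucleus's algorithm; it assumes folders keyed by a hypothetical stable ID with a parent pointer, and a starting layout consistent with the cycle above, in which "Archives" and "Drafts" sit at the root and "January" is inside "Drafts". Under that policy, whichever move is applied first succeeds and the second is rejected, matching the outcome where the final order depends on who uploads first.

```rust
use std::collections::HashMap;

/// Hypothetical stable folder identifier (survives renames and moves).
pub type NodeId = u64;
pub const ROOT: NodeId = 0;

#[derive(Debug, PartialEq)]
pub enum MoveError {
    UnknownNode,
    WouldCreateCycle,
}

/// Toy folder tree: each non-root folder maps to its parent's ID.
#[derive(Debug, Clone, Default)]
pub struct Tree {
    parent: HashMap<NodeId, NodeId>,
}

impl Tree {
    pub fn insert(&mut self, id: NodeId, parent: NodeId) {
        self.parent.insert(id, parent);
    }

    pub fn parent_of(&self, id: NodeId) -> Option<NodeId> {
        self.parent.get(&id).copied()
    }

    /// True if `ancestor` lies on the path from `node` up to the root
    /// (a node counts as its own ancestor).
    fn is_ancestor(&self, ancestor: NodeId, mut node: NodeId) -> bool {
        loop {
            if node == ancestor {
                return true;
            }
            match self.parent.get(&node) {
                Some(&p) => node = p,
                None => return false, // walked past the root
            }
        }
    }

    /// Moves `node` under `new_parent`, rejecting moves that would form a cycle.
    pub fn move_node(&mut self, node: NodeId, new_parent: NodeId) -> Result<(), MoveError> {
        if node == ROOT || !self.parent.contains_key(&node) {
            return Err(MoveError::UnknownNode);
        }
        if new_parent != ROOT && !self.parent.contains_key(&new_parent) {
            return Err(MoveError::UnknownNode);
        }
        // Moving a folder into itself or one of its descendants creates a cycle.
        if self.is_ancestor(node, new_parent) {
            return Err(MoveError::WouldCreateCycle);
        }
        self.parent.insert(node, new_parent);
        Ok(())
    }
}
```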

What's the correct final system state in this situation? Sync Engine Classic duplicates each directory, merging Alberto's and Beatrice's directory trees. With Nucleus we keep the original directories, and the final order depends on which sync engine uploads its move first.

In this simple situation with three folders and two moves, Nucleus has a satisfying final state. But how do we specify sync behavior in general without drowning in a list of corner cases?

Why rewrite?

Okay, so syncing files at scale is hard. Back in 2016, it looked like we had solved that problem pretty well. We had hundreds of millions of users, new product features like Smart Sync on the way, and a strong team of sync experts. Sync Engine Classic had years of production hardening, and we had spent time hunting down and fixing even the rarest bugs.

Joel Spolsky called rewriting code from scratch the "single worst strategic mistake that any software company can make." Successfully pulling off a rewrite often requires slowing feature development, since progress made on the old system needs to be ported over to the new one. And, of course, there were plenty of user-facing projects our sync engineers could have worked on.

But despite its success, Sync Engine Classic was deeply unhealthy. In the course of building Smart Sync, we'd made many incremental improvements to the system, cleaning up ugly code, refactoring interfaces, and even adding Python type annotations. We'd added copious telemetry and built processes for ensuring maintenance was safe and easy. However, these incremental improvements weren't enough.

Shipping any change to sync behavior required an arduous rollout, and we'd still find complex inconsistencies in production. The team would have to drop everything, diagnose the issue, fix it, and then spend time getting affected users' apps back into a good state. Even though we had a strong team of experts, onboarding new engineers to the system took years. Finally, we poured time into incremental performance wins but failed to appreciably scale the total number of files the sync engine could manage.

There were a few root causes for these issues, but the most important one was Sync Engine Classic's data model. The data model was designed for a simpler world without sharing, and files lacked a stable identifier that would be preserved across moves. There were few consistency guarantees, and we'd spend hours debugging issues where something theoretically possible but "extremely unlikely" would show up in production. Changing the foundational nouns of a system is often impossible to do in small pieces, and we quickly ran out of effective incremental improvements.

Next, the system was not designed for testability. We relied on slow rollouts and debugging issues in the field rather than automated pre-release testing. Sync Engine Classic's permissive data model meant we couldn't check much in stress tests, since there were large sets of undesirable yet still legal outcomes we couldn't assert against. Having a strong data model with tight invariants is immensely valuable for testing, since it's always easy to check if your system is in a valid state.
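To illustrate why tight invariants make stress tests easy to write, here is a sketch of a checker over a hypothetical ID-keyed data model in which each node has a stable ID, a parent ID, and a name. The three invariants (every parent exists, sibling names are unique, no cycles) are examples chosen for this sketch, not the actual invariants of either sync engine. A stress test could call such a checker after every step and fail on any non-empty result.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical stable identifier, preserved across renames and moves.
pub type NodeId = u64;
pub const ROOT: NodeId = 0;

/// A toy node: where it lives and what it is called.
#[derive(Debug, Clone)]
pub struct Node {
    pub parent: NodeId,
    pub name: String,
}

/// Returns a description of every invariant violation found (empty means valid).
pub fn check_invariants(nodes: &HashMap<NodeId, Node>) -> Vec<String> {
    let mut errors = Vec::new();
    let mut seen_names: HashSet<(NodeId, &str)> = HashSet::new();
    for (&id, node) in nodes {
        // 1. Every parent must exist (the root is implicit).
        if node.parent != ROOT && !nodes.contains_key(&node.parent) {
            errors.push(format!("node {id}: missing parent {}", node.parent));
        }
        // 2. No two siblings may share a name.
        if !seen_names.insert((node.parent, node.name.as_str())) {
            errors.push(format!("node {id}: duplicate name {:?}", node.name));
        }
        // 3. Walking up from any node must reach the root without revisiting a node.
        let mut cur = id;
        let mut visited = HashSet::new();
        while cur != ROOT {
            if !visited.insert(cur) {
                errors.push(format!("node {id}: cycle detected"));
                break;
            }
            match nodes.get(&cur) {
                Some(n) => cur = n.parent,
                None => break, // already reported as a missing parent
            }
        }
    }
    errors
}
```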

We discussed above how sync is a very concurrent problem, and testing and debugging concurrent code is notoriously difficult. Sync Engine Classic's threading-based architecture did not help at all, handing all our scheduling decisions to the OS and making integration tests non-reproducible. In practice, we ended up using very coarse-grained locks held for long periods of time. This architecture sacrificed the benefits of parallelism in order to make the system easier to reason about.

A rewrite checklist

Let's distill the reasons for our decision to rewrite into a "rewrite checklist" that can help navigate this kind of decision for other systems.

Have you exhausted incremental improvements?

□Have you tried refactoring code into better modules?

Poor code quality alone isn't a great reason to rewrite a system. Renaming variables and untangling intertwined modules can all be done incrementally, and we spent a lot of time doing this with Sync Engine Classic. Python's dynamism can make this difficult, so we added MyPy annotations as we went to gradually catch more bugs at compile time. But the core primitives of the system remained the same, as refactoring alone cannot change the fundamental data model.

□Have you tried improving performance by optimizing hotspots?

Software often spends most of its time in very little of the code. Many performance issues are not fundamental, and optimizing hotspots identified by a profiler is a great way to incrementally improve performance. We had a team working on performance and scale for months, and they had great results for improving file content transfer performance. But improvements to our memory footprint, like increasing the number of files the system could manage, remained elusive.

□Can you deliver incremental value?

Even if you decide to do a rewrite, can you reduce its risk by delivering intermediate value? Doing so can validate early technical decisions, help the project keep momentum, and lessen the pain of slowed feature development.

Can you pull off a rewrite?

□Do you deeply understand and respect the current system?

It's much easier to write new code than to fully understand existing code. So, before embarking on a rewrite, you must deeply understand and respect the "Classic" system. It's the whole reason your team and business are here, and it has years of accumulated wisdom from running in production. Get in there and do archaeology to dig into why everything is the way it is.

□Do you have the engineering-hours?

Rewriting a system from scratch is hard work, and getting to full feature completeness will require a lot of time. Do you have these resources? Do you have the domain experts who understand the current system? Is your organization healthy enough to sustain a project of this magnitude?

□Can you take a slower rate of feature development?

We didn't completely pause feature development on Sync Engine Classic, but every change on the old system pushed the finish line for the new one further out. We decided on shipping a few projects, and we had to be very intentional about allocating resources for guiding their rollouts without slowing down the rewriting team. We also heavily invested in telemetry for Sync Engine Classic to keep its steady-state maintenance cost to a minimum.

Do you know what you're going towards?

□Why will it be better the second time?

If you've gotten this far, you already understand the old system thoroughly and the lessons to be learned from it. But a rewrite should also be motivated by changing requirements or business needs. We described earlier how file syncing had changed, but our decision to rewrite was also forward-looking. Dropbox understands the growing needs of collaborative users at work, and building new features for these users requires a flexible, robust sync engine.

□What are your principles for the new system?

Starting from scratch is a great opportunity to reset technical culture for a team. Given our experience operating Sync Engine Classic, we heavily emphasized testing, correctness, and debuggability from the beginning. Encode all of these principles in your data model. We wrote out these principles early in the project's lifespan, and they paid for themselves over and over.

So, what did we build?

Here's a summary of what we achieved with Nucleus. For more details on each one, stay tuned for future blog posts.

- We wrote Nucleus in Rust! Rust has been a force multiplier for our team, and betting on Rust was one of the best decisions we made. More than performance, its ergonomics and focus on correctness have helped us tame sync's complexity. We can encode complex invariants about our system in the type system and have the compiler check them for us.

- Nearly all of our code runs on a single thread (the "Control thread") and uses Rust's futures library for scheduling many concurrent actions on this single thread. We offload work to other threads only as needed: network IO to an event loop, computationally expensive work like hashing to a thread pool, and filesystem IO to a dedicated thread. This drastically reduces the scope and complexity developers must consider when adding new features.

- The Control thread is designed to be entirely deterministic when its inputs and scheduling decisions are fixed. We use this property to fuzz it with pseudorandom simulation testing. With a seed for our random number generator, we can generate random initial filesystem state, schedules, and system perturbations and let the engine run to completion. Then, if we fail any of our sync correctness checks, we can always reproduce the bug from the original seed. We run millions of scenarios every day in our testing infrastructure.

- We redesigned the client-server protocol to have strong consistency. The protocol guarantees the server and client have the same view of the remote filesystem before considering a mutation. Shared folders and files have globally unique identifiers, and clients never observe them in transiently duplicated or missing states. Finally, folders and files support atomic moves independent of their subtree size. We now have strong consistency checks between the client's and server's view of the remote filesystem, and any discrepancy is a bug.
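As a small, hypothetical example of the first point, encoding an invariant in the type system: a `FileName` newtype with a private field, so code outside its module can only obtain one through a validating constructor, and any function that accepts a `FileName` can rely on the rule without re-checking it. The naming rules here are assumptions for illustration, not Dropbox's actual rules.

```rust
use std::fmt;

/// A validated path component: non-empty, not "." or "..", and free of '/' and NUL.
/// The field is private, so outside this module `FileName::new` is the only way in.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
pub struct FileName(String);

#[derive(Debug, PartialEq, Eq)]
pub enum InvalidName {
    Empty,
    Reserved,
    InvalidChar,
}

impl FileName {
    pub fn new(s: &str) -> Result<Self, InvalidName> {
        if s.is_empty() {
            Err(InvalidName::Empty)
        } else if s == "." || s == ".." {
            Err(InvalidName::Reserved)
        } else if s.contains('/') || s.contains('\0') {
            Err(InvalidName::InvalidChar)
        } else {
            Ok(FileName(s.to_owned()))
        }
    }

    pub fn as_str(&self) -> &str {
        &self.0
    }
}

impl fmt::Display for FileName {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        f.write_str(&self.0)
    }
}

/// Joins a parent path and a name. Because `name` is a `FileName`, the type
/// guarantees it was validated, so no runtime re-check is needed here.
pub fn join(parent: &str, name: &FileName) -> String {
    if parent.ends_with('/') {
        format!("{parent}{name}")
    } else {
        format!("{parent}/{name}")
    }
}
```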
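The second point, a single control thread that offloads expensive work, can be sketched under simplifying assumptions. Nucleus uses Rust futures and a thread pool; to stay dependency-free, this sketch instead spawns one `std::thread` per hashing job and sends results back over a `std::sync::mpsc` channel. The function `hash_all` plays the role of the control thread: it alone owns the sync state (here, a map of hypothetical content hashes), so that state needs no lock. `expensive_hash` is a stand-in built on `DefaultHasher`, not a real content hash.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};
use std::sync::mpsc;
use std::thread;

/// Messages that worker threads send back to the control thread.
enum Event {
    Hashed { path: String, hash: u64 },
}

/// Stand-in for an expensive content hash (not cryptographic, not Dropbox's hash).
fn expensive_hash(content: &[u8]) -> u64 {
    let mut hasher = DefaultHasher::new();
    content.hash(&mut hasher);
    hasher.finish()
}

/// Plays the role of the control thread: it is the only code that touches
/// `hashes`. Hashing runs on worker threads (one per job here, standing in for
/// a pool), and results come back as messages, so `hashes` needs no lock.
pub fn hash_all(files: Vec<(String, Vec<u8>)>) -> HashMap<String, u64> {
    let (tx, rx) = mpsc::channel();
    for (path, content) in files {
        let tx = tx.clone();
        thread::spawn(move || {
            let hash = expensive_hash(&content);
            // The receiver outlives every sender, so this send cannot fail.
            let _ = tx.send(Event::Hashed { path, hash });
        });
    }
    // Drop the original sender so the loop below ends after the last worker finishes.
    drop(tx);

    let mut hashes = HashMap::new();
    for event in rx {
        match event {
            Event::Hashed { path, hash } => {
                hashes.insert(path, hash);
            }
        }
    }
    hashes
}
```

Thread scheduling still decides the order in which results arrive, though not the final map; a fully deterministic control thread, as described in the next point, would also fix that ordering.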
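For the third point, here is a minimal sketch of seed-driven simulation in which everything is hypothetical: a hand-rolled SplitMix64 generator (to avoid external crates), a toy scenario in which each file must be uploaded and then committed, a scheduler that picks randomly among ready actions, and randomly injected upload failures as the "perturbation." Because every decision comes from the seeded generator rather than the OS, the same seed always produces the same trace.

```rust
/// Tiny deterministic PRNG (SplitMix64), so a run depends only on its seed.
pub struct Rng(u64);

impl Rng {
    pub fn new(seed: u64) -> Self {
        Rng(seed)
    }
    pub fn next_u64(&mut self) -> u64 {
        self.0 = self.0.wrapping_add(0x9E37_79B9_7F4A_7C15);
        let mut z = self.0;
        z = (z ^ (z >> 30)).wrapping_mul(0xBF58_476D_1CE4_E5B9);
        z = (z ^ (z >> 27)).wrapping_mul(0x94D0_49BB_1331_11EB);
        z ^ (z >> 31)
    }
    /// Index in 0..n (n > 0); the slight modulo bias is fine for testing.
    pub fn below(&mut self, n: usize) -> usize {
        (self.next_u64() % n as u64) as usize
    }
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Step {
    Upload(usize),
    UploadFailed(usize),
    Commit(usize),
}

/// Runs one toy scenario: each of `files` files is uploaded, then committed.
/// The seed alone decides the interleaving and which uploads fail.
pub fn simulate(seed: u64, files: usize) -> Vec<Step> {
    let mut rng = Rng::new(seed);
    let mut ready: Vec<Step> = (0..files).map(Step::Upload).collect();
    let mut trace = Vec::new();
    while !ready.is_empty() {
        // The scheduling decision comes from the seeded RNG, not the OS.
        let step = ready.swap_remove(rng.below(ready.len()));
        match step {
            Step::Upload(i) => {
                // Perturbation: about 1 in 4 uploads hits a transient network error.
                if rng.below(4) == 0 {
                    trace.push(Step::UploadFailed(i));
                    ready.push(Step::Upload(i)); // retry later
                } else {
                    trace.push(step);
                    ready.push(Step::Commit(i));
                }
            }
            Step::Commit(_) => trace.push(step),
            Step::UploadFailed(_) => unreachable!("failures are never scheduled"),
        }
    }
    trace
}

/// Correctness check: every file is committed exactly once, after a successful upload.
pub fn check(trace: &[Step], files: usize) -> bool {
    let mut uploaded = vec![false; files];
    let mut committed = vec![false; files];
    for step in trace {
        match *step {
            Step::Upload(i) => uploaded[i] = true,
            Step::UploadFailed(_) => {}
            Step::Commit(i) => {
                if !uploaded[i] || committed[i] {
                    return false;
                }
                committed[i] = true;
            }
        }
    }
    committed.iter().all(|&c| c)
}
```

If `check` ever returns `false` for some seed, calling `simulate` again with that seed replays exactly the same failing trace.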
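For the last point: with globally unique identifiers, comparing the client's and server's views becomes a mechanical diff. The sketch below assumes each side keeps a map from a hypothetical node ID to (parent, name, revision); these fields are illustrative, not the Nucleus protocol.

```rust
use std::collections::{BTreeMap, BTreeSet};

/// Hypothetical globally unique ID for a file or folder.
pub type NodeId = u64;

/// What one side believes about a node in the remote filesystem.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Entry {
    pub parent: NodeId,
    pub name: String,
    pub revision: u64,
}

/// Returns, in ascending order, the IDs on which the two views disagree,
/// including IDs present on only one side. Under a strongly consistent
/// protocol, a non-empty result indicates a bug.
pub fn discrepancies(
    client: &BTreeMap<NodeId, Entry>,
    server: &BTreeMap<NodeId, Entry>,
) -> Vec<NodeId> {
    let ids: BTreeSet<NodeId> = client.keys().chain(server.keys()).copied().collect();
    ids.into_iter()
        .filter(|id| client.get(id) != server.get(id))
        .collect()
}
```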


If you're interested in working on hard systems problems in Rust, we're hiring for our core sync team.

Thanks to Ben Blum, Anthony Kosner, James Cowling, Josh Warner, Iulia Tamas, and the Sync team for comments and review. And thanks to all current and past members of the Sync team who've contributed to building Nucleus.

Source: https://dropbox.tech/infrastructure/rewriting-the-heart-of-our-sync-engine
